Paper URL: https://openaccess.thecvf.com/content/CVPR2025/papers/Sun_Unsupervised_Continual_Domain_Shift_Learning_with_Multi-Prototype_Modeling_CVPR_2025_paper.pdf

Code URL: TBD

Conference: CVPR’25

TL;DR

To overcome the adaptivity gap in Unsupervised Continual Domain Shift Learning, the paper proposes Multi-Prototype Modeling (MPM), a method comprising two main parts: Multi-Prototype Learning (MPL) and Bi-Level Graph Enhancer (BiGE).

Introduction

What is Unsupervised Continual Domain Shift Learning (UCDSL)?

Unsupervised Continual Domain Shift Learning (UCDSL) aims to adapt a pre-trained model to dynamically shifting target domains without access to labeled data from either the source or target domains.
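To make the setting concrete, here is a toy sketch of the UCDSL protocol. It assumes 1-D features, a nearest-prototype classifier, and a generic pseudo-label self-training step as the adaptation rule; all of these (including the helpers `adapt` and `run_stream`) are hypothetical stand-ins, not the paper's method. The model starts from source-trained prototypes, adapts to each unlabeled target domain in turn, and after every step is evaluated on all domains seen so far. Ground-truth labels are used only for evaluation.

```python
# Hypothetical sketch of the UCDSL protocol (not the paper's method).
from typing import Dict, List, Tuple

Sample = Tuple[float, int]  # (1-D feature, ground-truth label); label used only for evaluation


def predict(protos: Dict[int, float], x: float) -> int:
    # Nearest-prototype classifier: pick the class whose prototype is closest to x.
    return min(protos, key=lambda c: abs(x - protos[c]))


def adapt(protos: Dict[int, float], xs: List[float]) -> Dict[int, float]:
    # Hypothetical adaptation rule (generic self-training):
    # pseudo-label each unlabeled feature, then move each prototype to its cluster mean.
    groups: Dict[int, List[float]] = {c: [] for c in protos}
    for x in xs:
        groups[predict(protos, x)].append(x)
    return {c: (sum(g) / len(g) if g else protos[c]) for c, g in groups.items()}


def accuracy(protos: Dict[int, float], data: List[Sample]) -> float:
    return sum(predict(protos, x) == y for x, y in data) / len(data)


def run_stream(source_protos: Dict[int, float], domains: List[List[Sample]]):
    protos = dict(source_protos)
    history: List[List[float]] = []
    for t, domain in enumerate(domains):
        unlabeled = [x for x, _ in domain]  # labels are hidden from adaptation
        protos = adapt(protos, unlabeled)
        # Evaluate on every domain seen so far, so forgetting would show up here.
        history.append([accuracy(protos, d) for d in domains[: t + 1]])
    return protos, history


# Two toy target domains, progressively shifted away from the source prototypes {0: 0.0, 1: 2.0}.
domains = [
    [(0.5, 0), (0.7, 0), (2.5, 1), (2.3, 1)],
    [(1.0, 0), (1.2, 0), (3.0, 1), (2.8, 1)],
]
final_protos, history = run_stream({0: 0.0, 1: 2.0}, domains)
print(history)  # [[1.0], [1.0, 1.0]]
```

Each entry of `history` lists the accuracy on all domains seen up to that step, which is how both adaptation and retention of earlier domains would be measured in this setting.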

Existing Methods of UCDSL

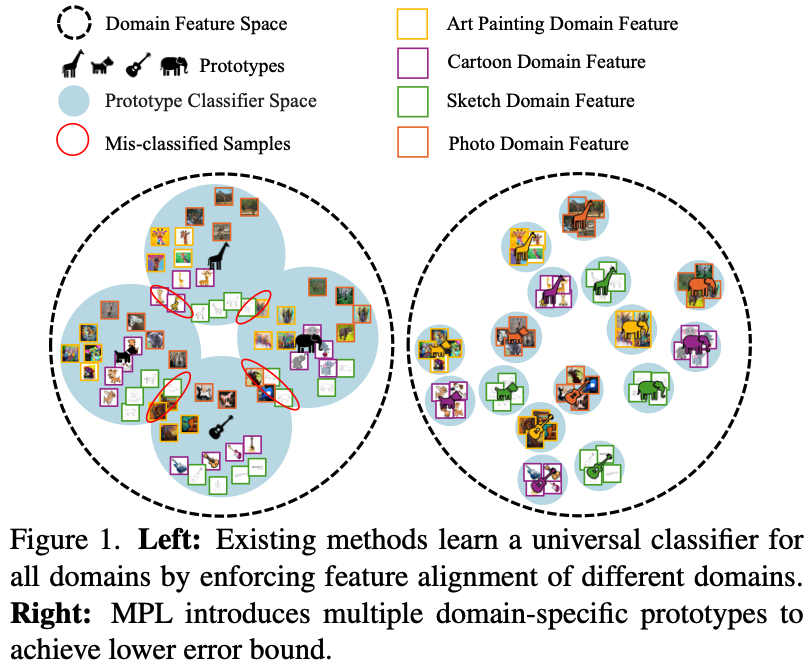

Existing methods attempt to learn a universal classifier for all domains by enforcing feature alignment across different domains. ==However, this assumes that a single classifier can be optimal for every domain once features are aligned. This assumption is not guaranteed, and the universal classifier may deviate significantly from the optimal classifier for a specific target domain.==

As shown in the left image, existing methods pursue alignment of the same classes across all domains. As a result, the shared classifier deviates from each domain's optimal classifier, causing misclassification.
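The failure mode can be reproduced with a toy example (hypothetical 1-D data, not the paper's figure). Two domains place the same two classes at different positions. Pooling features into one prototype per class, a simple stand-in for the universal classifier, misclassifies half of each domain, while per-domain prototypes classify every sample correctly.

```python
# Hypothetical toy illustration: universal (pooled) vs. domain-specific prototypes.
from statistics import mean
from typing import Dict, List, Tuple

Sample = Tuple[float, int]  # (1-D feature, label)

# Toy data: class positions shift between domains A and B.
data: Dict[str, List[Sample]] = {
    "A": [(-0.2, 0), (0.2, 0), (1.8, 1), (2.2, 1)],
    "B": [(2.8, 0), (3.2, 0), (4.8, 1), (5.2, 1)],
}


def class_means(samples: List[Sample]) -> Dict[int, float]:
    # One prototype per class: the mean feature of that class.
    labels = sorted({y for _, y in samples})
    return {c: mean(x for x, y in samples if y == c) for c in labels}


def nearest(protos: Dict[int, float], x: float) -> int:
    return min(protos, key=lambda c: abs(x - protos[c]))


def accuracy(protos: Dict[int, float], samples: List[Sample]) -> float:
    return sum(nearest(protos, x) == y for x, y in samples) / len(samples)


# "Universal" classifier: prototypes pooled across all domains (aligned classes).
universal = class_means([s for samples in data.values() for s in samples])
# Domain-specific classifiers: separate prototypes for each domain.
specific = {d: class_means(samples) for d, samples in data.items()}

for d, samples in data.items():
    print(d, accuracy(universal, samples), accuracy(specific[d], samples))
# A 0.5 1.0
# B 0.5 1.0
```

The pooled prototypes (1.5 and 3.5) sit between the two domains, so samples near the class boundary of either domain fall on the wrong side.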

Motivation

To address this issue, the paper ==introduces multiple domain-specific prototypes to enrich the hypothesis space and achieve more comprehensive representations==, as shown in the right image.

This brings the following benefits:

- MPL enriches the hypothesis space by introducing multiple harmoniously composed domain-specific prototypes, improving generalization to unseen domains.

- MPL preserves complementary information from multiple domains to reduce the adaptivity gap, resulting in enhanced domain adaptation ability.

- During adaptation, MPL explicitly preserves knowledge from previously encountered domains by retaining the corresponding prototypes.
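A minimal sketch of how retained domain-specific prototypes might be composed at inference, assuming 1-D features, a label set shared by all domains, and composition weights given by a softmax over each domain's closeness to the sample. The class `MultiPrototypeBank`, its `temperature` parameter, and the weighting rule are hypothetical; the paper's actual composition may differ. On two toy domains (A with classes at 0 and 2, B with classes at 3 and 5), the composite classifier labels samples from both domains correctly, and adding domain B leaves domain A's prototypes untouched.

```python
# Hypothetical sketch of multi-prototype composition (not the authors' implementation).
import math
from typing import Dict, List


class MultiPrototypeBank:
    """One set of class prototypes per domain, composed at inference (hypothetical)."""

    def __init__(self, temperature: float = 1.0) -> None:
        self.protos: Dict[str, Dict[int, float]] = {}
        self.temperature = temperature

    def add_domain(self, name: str, protos: Dict[int, float]) -> None:
        # New domains get their own prototypes; earlier ones are kept untouched,
        # so knowledge of previously seen domains is retained explicitly.
        self.protos[name] = dict(protos)

    def domain_weights(self, x: float) -> List[float]:
        # Softmax over negative distance to each domain's nearest prototype.
        logits = [-min(abs(x - p) for p in ps.values()) / self.temperature
                  for ps in self.protos.values()]
        m = max(logits)
        exps = [math.exp(v - m) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]

    def predict(self, x: float) -> int:
        weights = self.domain_weights(x)
        classes = next(iter(self.protos.values())).keys()  # assumes a shared label set
        # Composite score: weighted sum of per-domain negative distances.
        scores = {c: sum(w * -abs(x - ps[c]) for w, ps in zip(weights, self.protos.values()))
                  for c in classes}
        return max(scores, key=scores.get)


bank = MultiPrototypeBank()
bank.add_domain("A", {0: 0.0, 1: 2.0})
bank.add_domain("B", {0: 3.0, 1: 5.0})
samples = [(-0.2, 0), (0.2, 0), (1.8, 1), (2.2, 1), (2.8, 0), (3.2, 0), (4.8, 1), (5.2, 1)]
print(all(bank.predict(x) == y for x, y in samples))  # True
```

The soft weighting lets the domain whose prototypes best explain a sample dominate its score, which is one simple way to obtain per-domain decision boundaries without discarding older domains.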

To enhance feature representations, the paper also introduces the Bi-Level Graph Enhancer (BiGE), which leverages complementary information from two orthogonal perspectives: domain-wise and category-wise.
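This section only names BiGE's two perspectives, so the following is a generic sketch of two-level graph message passing under stated assumptions, not the paper's update rule. Each node is a feature vector with a domain tag and a (pseudo-)label; the domain-wise level aggregates over other nodes from the same domain, the category-wise level aggregates over other nodes with the same (pseudo-)label, edge weights are a softmax over dot-product similarity, and both messages are added residually with hypothetical weights `alpha` and `beta`. The function names `aggregate` and `bi_level_enhance` are illustrative only.

```python
# Hypothetical two-level graph feature enhancement (generic sketch, not the authors' BiGE).
import math
from typing import List, Sequence

Vec = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def aggregate(feats: List[Vec], i: int, nbrs: List[int]) -> Vec:
    # Softmax-weighted average of neighbour features (similarity-based edge weights).
    if not nbrs:
        return [0.0] * len(feats[i])  # isolated node: no message
    logits = [dot(feats[i], feats[j]) for j in nbrs]
    m = max(logits)
    w = [math.exp(v - m) for v in logits]
    total = sum(w)
    return [sum(wk / total * feats[j][d] for wk, j in zip(w, nbrs))
            for d in range(len(feats[i]))]


def bi_level_enhance(feats: List[Vec], domains: List[str], labels: List[int],
                     alpha: float = 0.5, beta: float = 0.5) -> List[Vec]:
    out: List[Vec] = []
    for i in range(len(feats)):
        # Domain-wise graph: edges to other nodes from the same domain.
        dom_nbrs = [j for j in range(len(feats)) if j != i and domains[j] == domains[i]]
        # Category-wise graph: edges to other nodes sharing the same (pseudo-)label.
        cat_nbrs = [j for j in range(len(feats)) if j != i and labels[j] == labels[i]]
        d_msg = aggregate(feats, i, dom_nbrs)
        c_msg = aggregate(feats, i, cat_nbrs)
        # Residual fusion of the two complementary messages.
        out.append([h + alpha * dm + beta * cm for h, dm, cm in zip(feats[i], d_msg, c_msg)])
    return out


feats = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = bi_level_enhance(feats, domains=["A", "A", "B"], labels=[0, 1, 0])
print(result)  # [[1.5, 1.0], [0.5, 1.0], [1.5, 1.0]]
```

Because the two neighbourhoods are defined along different axes (shared domain vs. shared category), each node receives information that the other level cannot provide, which is the intuition behind combining them.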